DeepSeek, a leading Chinese AI company, recently released its R1 model, which rivals OpenAI’s o1 model. One of the key advantages of DeepSeek’s R1 is that it’s open source, so users can download and run it locally. This guide walks you through setting up and using the model with Ollama, a free tool for running language models on your own computer.

Takeaways:

- Learn how to install and use DeepSeek AI on your local computer so you can use it offline

- Learn how to uninstall DeepSeek AI from your computer if you installed it locally


How to Run DeepSeek R1 Locally - Install DeepSeek-r1 to Use Offline

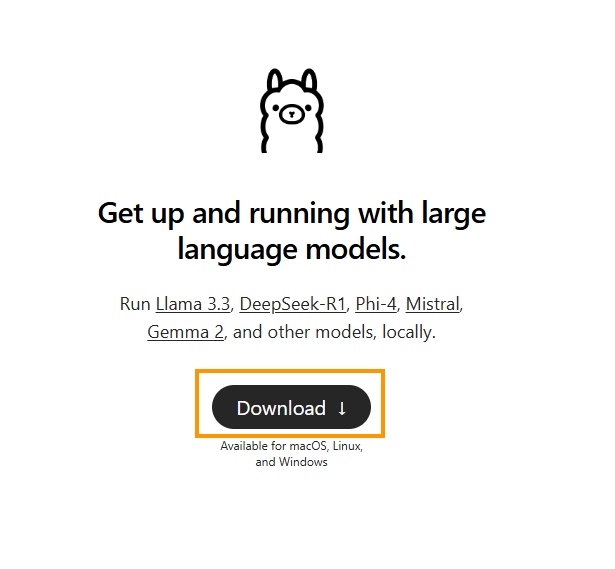

- First, head over to the Ollama website (ollama.com) and download the client.

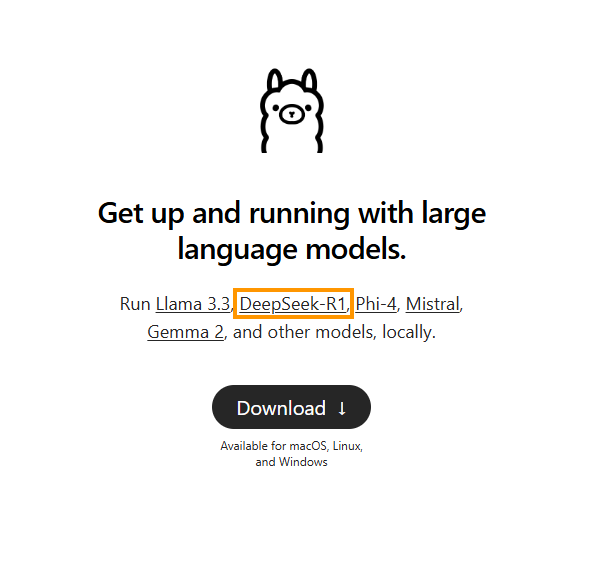

- Once you have Ollama installed, go back to the main Ollama page and click the DeepSeek-R1 link above the Download button.

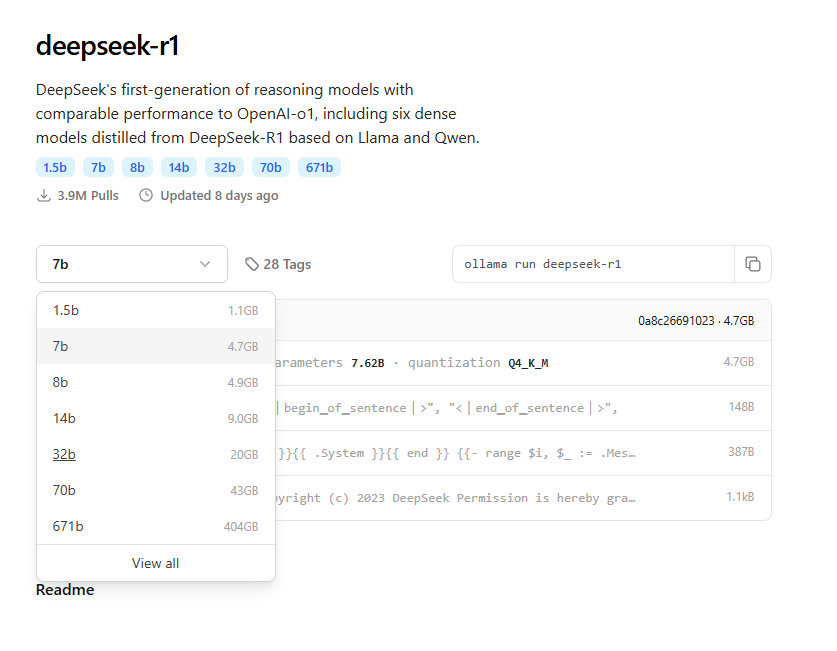

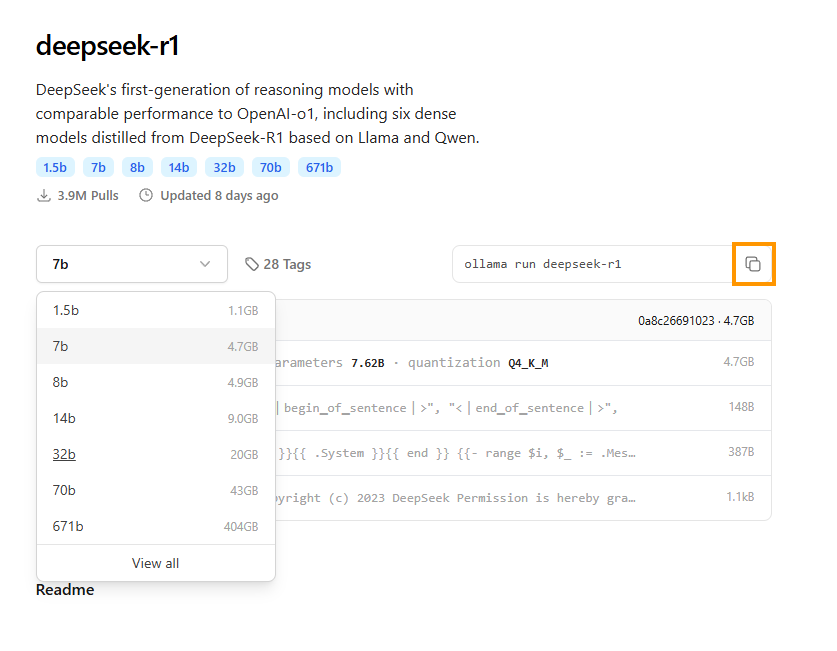

- This takes you to a new page. Here, select the model you want to use from the drop-down box. Keep in mind that your hardware will limit performance, so you'll probably want to stick with the 8b, 14b, or 32b versions.

The R1 model is available in different parameter sizes:

- Versions under 32 billion parameters (such as 1.5b, 7b, 8b, and 14b): more suitable for low-end computers.

- 32 billion parameters (20 GB): ideal for high-end gaming computers.

- 671 billion parameters (404 GB): requires specialized hardware to run effectively.

For this guide, we’ll use the 32-billion-parameter version, but you can choose whichever one you want. The steps don't change.
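If you're unsure which version your machine can handle, a rough rule of thumb is that a model's size grows roughly in proportion to its parameter count. The sketch below derives a GB-per-billion-parameters ratio from the 671b figure above (404 GB) and picks the largest tag that fits a given amount of memory. The linear ratio, the 20% headroom factor, and the function names are assumptions for illustration, not official Ollama sizing guidance; real memory use also depends on quantization and context length.

```python
from typing import Optional

# Assumption: size scales roughly linearly with parameter count.
# 404 GB for 671B parameters (from the list above) is about 0.6 GB per billion.
GB_PER_BILLION_PARAMS = 404 / 671

# Parameter counts, in billions, for the R1 tags mentioned in this guide
R1_TAGS = {"1.5b": 1.5, "7b": 7, "8b": 8, "14b": 14, "32b": 32, "671b": 671}


def estimate_size_gb(params_billions: float) -> float:
    """Rough size estimate (GB) for a model with the given parameter count."""
    return params_billions * GB_PER_BILLION_PARAMS


def largest_fitting_tag(memory_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Return the largest tag whose estimated size, padded by `headroom`, fits in memory_gb."""
    fitting = [
        (params, tag)
        for tag, params in R1_TAGS.items()
        if estimate_size_gb(params) * headroom <= memory_gb
    ]
    # max() compares the parameter count first, so the biggest fitting model wins
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    for memory in (8, 16, 24):
        print(f"{memory} GB -> deepseek-r1:{largest_fitting_tag(memory)}")
```

With these assumptions it suggests 8b for 8 GB, 14b for 16 GB, and 32b for 24 GB. The estimate for 32b comes out around 19 GB, close to the 20 GB listed above, which is why a single ratio is a reasonable first guess.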

- Once you have selected the version you want from the drop-down menu, click the Copy Code icon to copy the command.

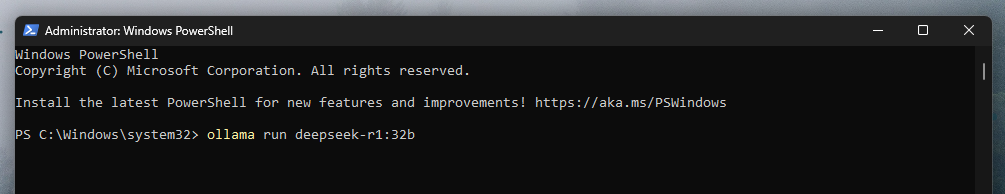

- Now open PowerShell as an Administrator (search for it from the Start menu). Then paste the command into the PowerShell window and press Enter. For the 32b version, the command is `ollama run deepseek-r1:32b`.

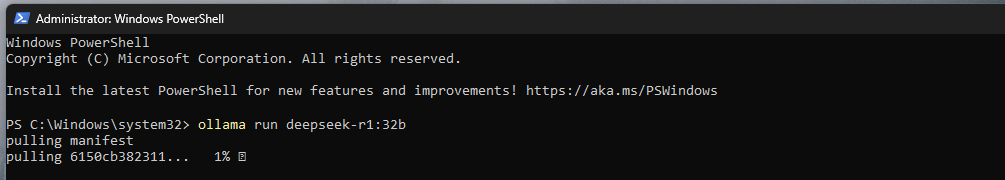

- You'll have to wait for the model to download and install, which can take a long time depending on your internet connection.

How to Use DeepSeek R1 Model Locally

While this tool is "open source" and "fully local," keep in mind that it comes from a China-based company, so expect your privacy to be limited. Unless your computer is entirely offline, I wouldn't trust it completely. AI tools are known for lousy privacy policies and excel at harvesting data to keep training their models. OpenAI's ChatGPT is no different.

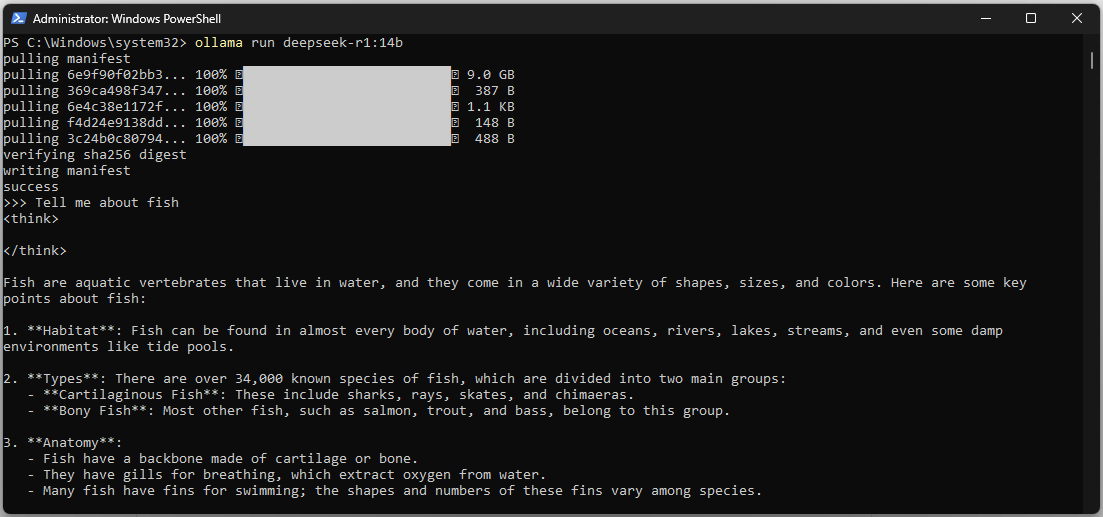

Once it's installed, you'll get a prompt where you can start chatting with it. Just type whatever you want and wait for the reply. Your system's hardware determines how long each reply takes.
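The interactive prompt shows the reply as it's generated. If you want the same behaviour from a script, Ollama's local HTTP API (by default at http://localhost:11434) streams newline-delimited JSON from its /api/generate endpoint: each line carries a "response" text fragment, and the last line has "done": true. The sketch below uses only the Python standard library. The function names and the deepseek-r1:14b tag are placeholders, and it assumes Ollama is running with that model already downloaded.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines between JSON objects
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the reply


def stream_reply(prompt: str, model: str = "deepseek-r1:14b") -> str:
    """Send a prompt, print the reply as it streams in, and return the full text."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    parts = []
    # Streaming is the API's default; iterating the response yields one line at a time
    with urllib.request.urlopen(request) as resp:
        for fragment in iter_fragments(resp):
            print(fragment, end="", flush=True)
            parts.append(fragment)
    print()
    return "".join(parts)
```

For example, `stream_reply("Explain recursion in one paragraph.")` prints the answer word by word, just like the PowerShell window. R1's reasoning arrives in the same stream, so the text will include its thinking before the final answer.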

- If you close the window and want to chat again, open PowerShell as Admin and run the same command you used the first time, for example `ollama run deepseek-r1:14b`. Since the model is already downloaded, it starts without downloading again.

- You can also run multiple tasks in separate PowerShell tabs. Just keep in mind that this usually splits your hardware resources between the tasks, so it isn't really any faster.

Exploring DeepSeek-R1 Model Capabilities

- The R1 AI model provides personalized responses with a "thinking aloud" approach, making it feel more human-like.

- Its chain-of-thought reasoning allows it to logically break down problems and iterate on ideas.

- Brainstorming: Generate creative ideas, such as brand names for a YouTube channel.

- Programming: Ask the model to write code snippets.

- Nuanced Conversations: Engage in complex discussions where the model seems to reason through answers.

Handling Slow Output Speeds

If the model runs slower than expected, consider these factors:

- The 32-billion-parameter model may run slowly on standard hardware.

- Wordy outputs take longer to generate.

- A high-performance GPU like the GeForce RTX 4090 gives better speeds.

- To fix these issues on less powerful hardware, download the 8b, 7b, or 1.5b models.
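To check whether a smaller model actually helps on your machine, you can measure generation speed. When Ollama's /api/generate endpoint is called with "stream": false, it returns the whole reply as one JSON object that includes eval_count (tokens generated) and eval_duration (time spent generating them, in nanoseconds). The sketch below divides the two. The helper names are illustrative, and it assumes Ollama is running locally on its default port with the models you want to compare already downloaded.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama address


def tokens_per_second(result: dict) -> float:
    """Generation speed from Ollama's eval_count and eval_duration (nanoseconds)."""
    return result["eval_count"] * 1e9 / result["eval_duration"]


def benchmark(model: str, prompt: str) -> float:
    """Run one non-streaming request and return the measured tokens per second."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as resp:
        result = json.load(resp)
    return tokens_per_second(result)
```

For example, loop over `["deepseek-r1:1.5b", "deepseek-r1:8b", "deepseek-r1:32b"]` and print `benchmark(tag, "Why is the sky blue?")` for each tag. Using the same prompt for every model keeps the comparison fair.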

Filtering "Thinking Tags"

When reasoning through a query, the model wraps its reasoning in "thinking tags" (`<think>` and `</think>`) that look like HTML tags. To filter them out of the output, you can:

- Manually remove these tags from the text output.

- Automate the filtering with a script that processes the text before saving it.
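A minimal sketch of the automated approach uses a regular expression to drop every `<think>...</think>` block before the text is saved. It assumes the tags appear exactly in that form, and also handles the case where only the closing tag survived (for example, if the start of the output was cut off). The function names and the answer.txt file name are hypothetical.

```python
import re
from pathlib import Path

# Matches a complete <think>...</think> block, including newlines inside it
THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL)


def strip_thinking(text: str) -> str:
    """Remove every <think>...</think> block and trim leftover whitespace."""
    cleaned = THINK_BLOCK.sub("", text)
    # If a stray closing tag remains, keep only what comes after it
    if "</think>" in cleaned:
        cleaned = cleaned.split("</think>")[-1]
    return cleaned.strip()


def save_answer(raw_output: str, path: str = "answer.txt") -> None:
    """Write the model's answer, without its reasoning, to a text file."""
    Path(path).write_text(strip_thinking(raw_output), encoding="utf-8")


if __name__ == "__main__":
    raw = "<think>\nThe user wants a greeting.\n</think>\n\nHello there!"
    print(strip_thinking(raw))  # Hello there!
```

The non-greedy `.*?` matters: with a greedy `.*`, two separate thinking blocks would be matched as one span and the answer text between them would be deleted too.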

Integrating DeepSeek with Applications

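For programmatic use, you can call the model through Ollama's local API or through a framework like LlamaIndex, a common starting point for building AI agents. Below is a short example of sending a prompt to your local DeepSeek model through LlamaIndex.

Steps to Use DeepSeek from Python: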

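- Install the necessary libraries (LlamaIndex and its Ollama integration):

```powershell
pip install llama-index llama-index-llms-ollama
```

- Write code to interact with the model programmatically. Ollama must be running, and the model tag must match one you've downloaded:

```python
# LlamaIndex's connector for models served by a local Ollama install
from llama_index.llms.ollama import Ollama

# Large models can be slow to answer, so allow a generous timeout (in seconds)
llm = Ollama(model="deepseek-r1:32b", request_timeout=300.0)

response = llm.complete("Tell a story for kids about space adventures.")
print(response)
```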
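For simple integrations you don't strictly need a framework: Ollama also exposes a local HTTP API (by default at http://localhost:11434). The sketch below keeps a conversation history and sends it to the /api/chat endpoint with "stream": false, which returns the reply under message.content. It uses only the Python standard library. The Conversation class is a hypothetical helper, the deepseek-r1:32b tag is just the default used in this guide, and Ollama must be running with that model downloaded.

```python
import json
import urllib.request
from dataclasses import dataclass, field

CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default chat endpoint


@dataclass
class Conversation:
    """Hypothetical helper that keeps the message history for multi-turn chats."""

    model: str = "deepseek-r1:32b"
    messages: list = field(default_factory=list)

    def payload(self, new_message: dict) -> bytes:
        """Request body for /api/chat: prior history plus the new message, not streamed."""
        return json.dumps(
            {"model": self.model, "messages": self.messages + [new_message], "stream": False}
        ).encode("utf-8")

    def ask(self, text: str) -> str:
        """Send a user message and return the assistant's reply."""
        user_message = {"role": "user", "content": text}
        request = urllib.request.Request(
            CHAT_URL, data=self.payload(user_message), headers={"Content-Type": "application/json"}
        )
        with urllib.request.urlopen(request) as resp:
            content = json.load(resp)["message"]["content"]
        # Record the exchange only after the request succeeded
        self.messages += [user_message, {"role": "assistant", "content": content}]
        return content
```

Usage: create `chat = Conversation()`, then call `print(chat.ask("Suggest three names for a space-themed YouTube channel."))`. Later calls to `chat.ask(...)` send the earlier turns along, so the model can refer back to them.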

Benefits of Using Open-Source Models

- Cost-Free Usage: No subscription fees.

- Full Control: Run the model locally without relying on cloud services.

- Industry Importance: Promotes decentralization and helps prevent monopolies in AI.

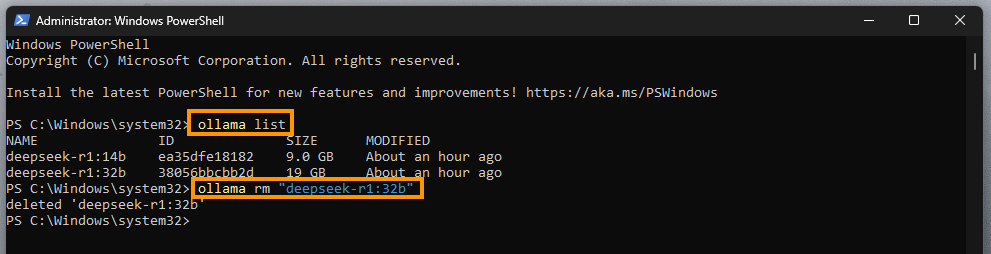

How to Uninstall DeepSeek R1 Models

To remove DeepSeek models, do the following:

- Open a terminal (PowerShell).

- Run the following command to see which models you have installed:

```powershell
ollama list
```

- Then remove any model you don't want with `ollama rm`, replacing "deepseek-r1:8b" with the name of the model you want to remove. For example, in my case:

```powershell
ollama rm "deepseek-r1:8b"
```

- This only removes the DeepSeek models. You'll still have to uninstall Ollama itself, but that can be done from the Control Panel like any other software.
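If you downloaded several sizes, removing them one at a time gets tedious. The sketch below runs `ollama list`, takes the first column of each row after the header, and calls `ollama rm` for every name that starts with deepseek-r1. It assumes the `ollama` command is on your PATH and that the list output keeps its current layout (a header row, then one model per line with the name first). The function names and the dry_run switch are hypothetical, and by default it only prints what it would remove.

```python
import subprocess
from typing import List


def parse_model_names(list_output: str) -> List[str]:
    """Extract model names (first column) from `ollama list` output, skipping the header."""
    lines = [line for line in list_output.splitlines() if line.strip()]
    return [line.split()[0] for line in lines[1:]]


def remove_models(prefix: str = "deepseek-r1", dry_run: bool = True) -> List[str]:
    """Find installed models whose name starts with `prefix` and remove them with `ollama rm`."""
    listing = subprocess.run(
        ["ollama", "list"], capture_output=True, text=True, check=True
    ).stdout
    targets = [name for name in parse_model_names(listing) if name.startswith(prefix)]
    for name in targets:
        if dry_run:
            print(f"Would run: ollama rm {name}")
        else:
            subprocess.run(["ollama", "rm", name], check=True)
    return targets
```

Call `remove_models()` first to preview the list, then `remove_models(dry_run=False)` to actually delete those models.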

Uninstall DeepSeek AI Using the Control Panel or File Explorer

-

Uninstall oLlama from Control Panel: Go to Control Panel > Programs > Uninstall a Program, select oLlama, and click Uninstall.

-

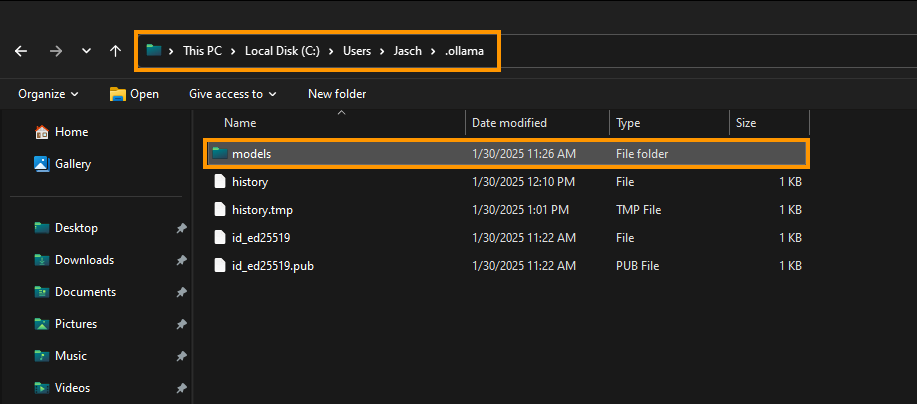

Manually Delete Model Files: Go to the following locations. Remember to swap out username for your username. Then just delete the models folder and you're good to go!

- macOS: ~/.ollama/models

- Linux: /usr/share/ollama/.ollama/models

- Windows: C:\Users\username\.ollama\models
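Before deleting the folder by hand, you may want to confirm where it is and how much space it will free. The sketch below maps each operating system to the default location listed above, honours the OLLAMA_MODELS environment variable if you've set one (Ollama uses it to relocate its model store), and totals the file sizes. It only reads the folder; deleting it is still up to you. The function names are hypothetical, and the Linux path assumes the standard install that runs Ollama as a system service.

```python
import os
import platform
from pathlib import Path
from typing import Optional


def default_models_dir(system: Optional[str] = None) -> Path:
    """Default Ollama model folder for the given OS name (as returned by platform.system())."""
    system = system or platform.system()
    if system == "Linux":
        # Standard service install; a manual install may use ~/.ollama/models instead
        return Path("/usr/share/ollama/.ollama/models")
    # macOS ("Darwin") and Windows both keep models in .ollama/models under the home folder
    return Path.home() / ".ollama" / "models"


def folder_size_gib(folder: Path) -> float:
    """Total size of all files under `folder`, in GiB (0.0 if the folder doesn't exist)."""
    if not folder.exists():
        return 0.0
    total_bytes = sum(f.stat().st_size for f in folder.rglob("*") if f.is_file())
    return total_bytes / 1024**3


if __name__ == "__main__":
    custom = os.environ.get("OLLAMA_MODELS")  # set only if you moved the model store
    models = Path(custom) if custom else default_models_dir()
    print(f"{models}: {folder_size_gib(models):.1f} GiB")
```

Run it before and after uninstalling to confirm the space was actually reclaimed.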